
August 5 was not a normal day for Kaicheng Yang. It was the day after a US court published Elon Musk’s argument for why he should no longer have to buy Twitter. And Yang, a PhD student at Indiana University, was shocked to discover that his bot detection software was at the center of a titanic legal battle.

Twitter sued Musk in July, after the Tesla CEO tried to retract his $44 billion offer to buy the platform. Musk, in turn, filed a countersuit accusing the social network of misrepresenting the number of fake accounts on the platform. Twitter has long maintained that spam bots represent less than 5 percent of its total number of “monetizable” users, meaning users who can see ads.

According to legal documents, Yang’s Botometer, a free tool that claims it can identify how likely a Twitter account is to be a bot, has been critical in helping Team Musk prove that figure is not true. “Contrary to Twitter’s representations that its business was minimally affected by false or spam accounts, the Musk Parties’ preliminary estimates show otherwise,” says Musk’s counterclaim.

But telling the difference between humans and bots is harder than it sounds, and one researcher has accused Botometer of “pseudoscience” for making it look easy. Twitter has been quick to point out that Musk used a tool with a history of making mistakes. In its legal filings, the platform reminded the court that Botometer defined Musk himself as likely to be a bot earlier this year.

Despite that, Botometer has become widely used, especially among university researchers, because of the demand for tools that promise to distinguish bot accounts from humans. As a result, it will not only be Musk and Twitter on trial in October, but also the science behind bot detection.

Yang did not start Botometer; he inherited it. The project was set up around eight years ago. But as its founders graduated and moved on from university, responsibility for maintaining and updating the tool fell to Yang, who declines to confirm or deny whether he has been in contact with Elon Musk’s team. Botometer is not his full-time job; it’s more of a side project, he says. He works on the tool when he’s not carrying out research for his PhD project. “Currently, it’s just me and my adviser,” he says. “So I’m the person really doing the coding.”

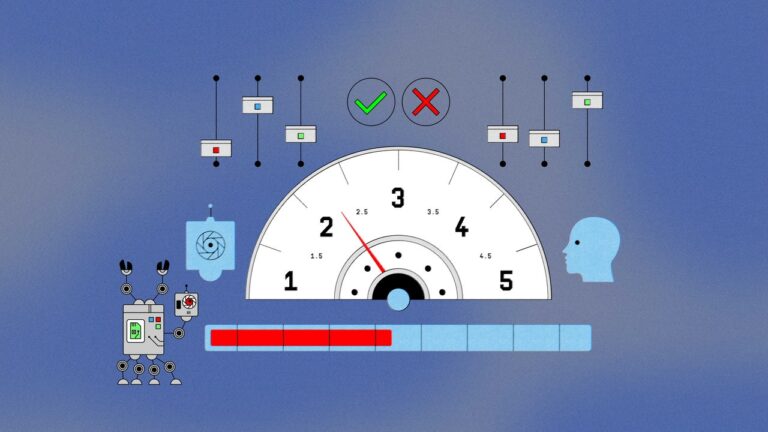

Botometer is a supervised machine learning tool, which means it learned to separate bots from humans by training on examples of accounts that had already been labeled as one or the other. Yang says Botometer differentiates bots from humans by looking at more than 1,000 details associated with a single Twitter account, such as its name, profile picture, followers, and ratio of tweets to retweets, before giving it a score between zero and five. “The higher the score means it’s more likely to be a bot, the lower score means it’s more likely to be a human,” says Yang. “If an account has a score of 4.5, it means it’s really likely to be a bot. But if it’s 1.2, it’s more likely to be a human.”

Crucially, however, Botometer does not give users a threshold, a definitive number above which all accounts count as bots. Yang says the tool should not be used at all to decide whether individual accounts or groups of accounts are bots. He prefers that it be used comparatively, to understand whether one conversation topic is more polluted by bots than another.
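To make the comparative use Yang describes concrete, here is a minimal sketch, assuming that averaging scores per topic is an acceptable way to compare topics. It does not call Botometer; the topic names and scores below are made-up numbers on Botometer's zero-to-five scale, and `mean_score_by_topic` is a hypothetical helper written for illustration.

```python
from statistics import mean

# Hypothetical scores on a 0-5 scale, grouped by conversation topic.
# These are invented for illustration only, not real Botometer output.
topic_scores = {
    "topic_a": [0.4, 1.1, 3.9, 0.8, 4.6, 1.3],
    "topic_b": [0.3, 0.9, 1.2, 0.6, 2.0, 0.7],
}


def mean_score_by_topic(scores_by_topic):
    """Average score per topic: a relative comparison that needs no bot threshold."""
    return {topic: mean(scores) for topic, scores in scores_by_topic.items()}


averages = mean_score_by_topic(topic_scores)
# The topic with the higher average looks more bot-like relative to the other,
# without labeling any single account as a bot.
more_polluted = max(averages, key=averages.get)
print(averages)
print(f"More bot-like on average: {more_polluted}")
```

The point of the design is that no account is ever classified; only the two groups are compared with each other.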

Still, some researchers continue to use the tool incorrectly, says Yang. And the lack of a threshold has created a gray area: without one, there’s no consensus about how to define a bot. Researchers hoping to find more bots can choose a lower threshold than researchers hoping to find fewer. In pursuit of clarity, many disinformation researchers have defaulted to defining bots as any account that scores above 50 percent, or 2.5, on Botometer’s scale, according to Florian Gallwitz, a computer science professor at Germany’s Nuremberg Institute of Technology.
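A short sketch shows why the choice of threshold matters so much. The scores below are invented for illustration on a zero-to-five scale, not real Botometer results, and `count_bots` and `as_percent` are hypothetical helpers. The same set of accounts yields a different bot count depending on where the line is drawn, and 2.5 is simply the midpoint of the scale, which is why it is also described as 50 percent.

```python
# Hypothetical scores on a 0-5 scale; illustrative only, not real Botometer output.
scores = [0.4, 1.1, 3.9, 0.8, 4.6, 1.3, 2.7, 2.2, 3.1, 0.9]

SCALE_MAX = 5.0


def count_bots(scores, threshold):
    """Count accounts labeled 'bot' when any score strictly above `threshold` counts."""
    return sum(1 for s in scores if s > threshold)


def as_percent(score):
    """Express a 0-5 score as a percentage of the scale (2.5 -> 50.0)."""
    return score / SCALE_MAX * 100


# Lowering the threshold labels more of the same accounts as bots.
for threshold in (1.5, 2.5, 3.5):
    n = count_bots(scores, threshold)
    print(f"threshold {threshold} ({as_percent(threshold):.0f}%): "
          f"{n} of {len(scores)} labeled bots")
```

With these made-up scores, moving the cutoff from 3.5 to 1.5 more than doubles the number of accounts labeled as bots, even though nothing about the accounts has changed.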
