
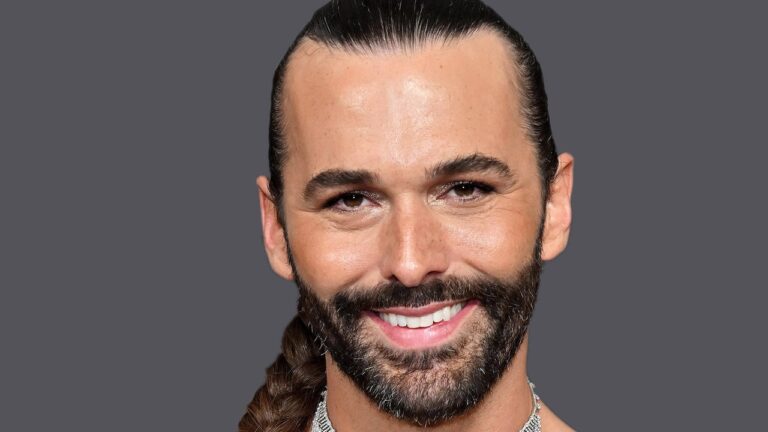

When the podcast Getting Curious with Jonathan Van Ness first dropped in 2015, it was with an episode titled “What’s the Difference Between Sunni and Shia Muslims and Why Don’t They Love Each Other?” in which the then-Gay of Thrones star spoke with a UCLA professor to hash out centuries of complicated strife. The show was a modest success, but three years later Van Ness was nabbed by Netflix to become one of Queer Eye’s new Fab Five and suddenly found himself beloved by millions of new fans.

Since then, Van Ness has written a memoir, a children’s book, and an essay collection; been nominated for multiple Emmys; spent time in DC lobbying for LGBTQ+ rights; come out as both non-binary and HIV positive; begun touring a live show that blends stand-up and gymnastics; and even launched his own line of hair care products. He’s also turned the podcast into a Netflix show.

Through it all, though, Van Ness has still made time for his podcast, with Getting Curious dropping its 300th episode last week. Topics along the way have ranged from fatphobia to The Great British Bake Off, with Van Ness maintaining that he’ll cover anything as long as he’s genuinely interested in it. “I’m just so curious about us in the United States, how things got this way, and how we became who we became,” says Van Ness. “I feel like I’ve grown up with the show, and so much of what I know about life, I’ve learned while recording this podcast.”

With an eye toward sharing some of that education, Van Ness whittled down the massive Getting Curious library to his nine favorite episodes, choosing a selection for WIRED that he hopes will “turn more people on to their passions.”

Van Ness: [Data journalist] Meredith Broussard’s work is something I reference a lot. Techno-chauvinism, on the whole, is the idea that machines know how to do things better than humans. She used the example of a set of automatic blinds. It’s nice to press a button and make them go up, but when they break, you can’t fix them. If you had manual blinds, you’d just go over and lower them with the string, and they’d keep working fine. They would be easier to fix.

A more important example that she talks about is her algorithmic bias work, like how a police scanner or facial recognition system can’t identify a gender-nonconforming person. A lot of these algorithms are a reflection of the people who make them, and more often than not, the people who make these algorithms are men. The people who make the algorithms aren’t really diverse. It’s not encouraged to bring up issues like that within those spaces, and dissent tends to get unceremoniously quashed.

So techno-chauvinism is baked into the systems that influence our day-to-day lives in really important ways. If you’re at a TSA scanner, for instance, you might get pulled out of line because you’re listed as a man but you’re wearing a longer shirt, so you may get a pat-down that someone else may not get, just because those algorithms don’t know how to recognize the nuance that a human eye would be able to identify.

Van Ness: Tina Lasisi is an evolutionary biologist who studies human hair variation, but she also studies how we got here, like how human hair and scalps evolved. So much of what I learned in hair school about why curly hair is curly, why wavy hair is wavy, why straight hair is straight … it’s all a lie. It’s not even true. In hair school we’re told that coily hair is more kidney-bean-shaped, curly hair is more like an oval, and straight hair is more like a perfect circle. But in her work at the lab, they’ve actually found every single type of hair in all of those shapes.

The really scary thing about it is that all of this false hair science was used in crime scene investigation in the ’80s and ’90s, like, “This hair was there, and because it’s kidney-bean-shaped, we know it was a Black person.” It’s more nuanced than that.

